I am a PhD researcher in Artificial Intelligence and Music at Queen Mary University of London, funded by the UKRI AI & Music CDT.

Music plays a pivotal role in media, serving as a potent device for storytelling and audience immersion. Beyond mere accompaniment, music holds the power to subtly foreshadow events and evoke emotions, enhancing the narrative experience.

Current AI-driven music generation techniques, while advancing rapidly, often fall short of the nuanced musicality that narrative-driven media demands. Many of these tools prioritise emotional expression at the expense of musical sophistication. This limitation is exacerbated by the absence of professional composers from the development process, so tools are often created by people who lack in-depth music knowledge or specialisation in media composition.

Furthermore, many existing AI music generation systems adopt a fully generative approach, sidelining the input and creative authority of composers. This risks diluting the composer’s unique artistic identity and undermines the collaborative potential between human creativity and computational assistance. There is therefore a pressing need for solutions that not only harness the capabilities of AI but also empower composers to maintain their individuality and artistic vision.

My work explores the intersection of generative music composition, emotion-driven storytelling, and human-computer co-creativity in film and video game media. With a focus on enhancing narrative immersion through music, the research aims to bridge the gap between traditional compositional techniques and computational methodologies.

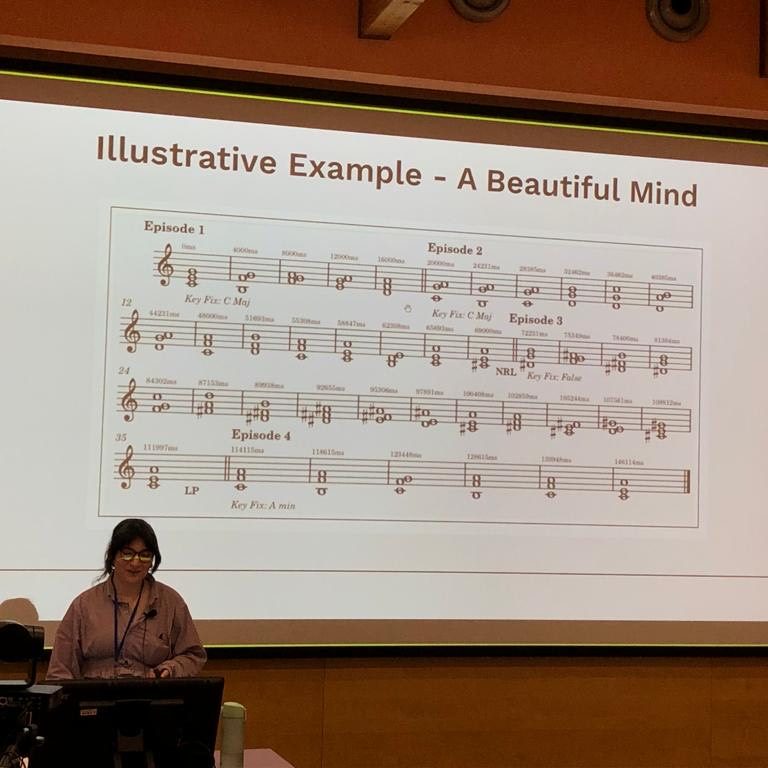

Drawing on principles from musicology, music theory, and music cognition, this project aims to develop a novel tool that leverages the associative capabilities of Neo-Riemannian theory. By linking these foundational concepts, the tool will facilitate the adaptive integration of leitmotifs into media compositions, thereby enriching the storytelling experience.
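For readers unfamiliar with Neo-Riemannian theory, the sketch below illustrates its three basic operations on major and minor triads: P (parallel), L (leading-tone exchange), and R (relative). It is only an illustration of the standard textbook transformations, not the tool described here. The triad representation (a root pitch class from 0 to 11 plus a quality string) and all function names are assumptions made for this example.

```python
# Illustrative sketch of the standard Neo-Riemannian P, L and R operations.
# A triad is a hypothetical (root_pitch_class, quality) pair, where the root
# is an integer 0-11 (0 = C) and the quality is "maj" or "min".

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def parallel(triad):
    """P: keep the root, swap major and minor (C major <-> C minor)."""
    root, quality = triad
    return (root, "min" if quality == "maj" else "maj")


def relative(triad):
    """R: move to the relative key's triad (C major <-> A minor)."""
    root, quality = triad
    if quality == "maj":
        return ((root + 9) % 12, "min")
    return ((root + 3) % 12, "maj")


def leading_tone(triad):
    """L: leading-tone exchange (C major <-> E minor)."""
    root, quality = triad
    if quality == "maj":
        return ((root + 4) % 12, "min")
    return ((root + 8) % 12, "maj")


OPERATIONS = {"P": parallel, "L": leading_tone, "R": relative}


def apply_transformations(triad, word):
    """Apply a sequence of operations such as "PL", reading left to right."""
    for letter in word:
        triad = OPERATIONS[letter](triad)
    return triad


def triad_name(triad):
    """Human-readable name using sharps only, e.g. (9, "min") -> "A minor"."""
    root, quality = triad
    return f"{NOTE_NAMES[root]} {'major' if quality == 'maj' else 'minor'}"


if __name__ == "__main__":
    c_major = (0, "maj")
    for word in ["P", "L", "R", "PL"]:
        print(word, "->", triad_name(apply_transformations(c_major, word)))
```

Each operation changes a single note of the triad and is its own inverse, which is what makes chains of these transformations a compact way to relate harmonically distant chords.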

Central to this endeavour is the emphasis on fostering human-computer co-creativity. Rather than replacing composers, the tool will serve as a collaborative partner, empowering them to retain ownership and develop their unique artistic voice. By lowering compositional barriers and easing the time constraints inherent in media scoring, the tool will support composers in overcoming creative blocks and enhance their capacity for expressive storytelling.

Through the synthesis of musicological theory, computational techniques, and creative empowerment, this thesis contributes to the advancement of generative music composition practices for narrative-driven media, facilitating a harmonious collaboration between human creativity and computational assistance.